Stephen Chung

University of Cambridge

Trumpington St

Cambridge CB2 1PZ

United Kingdom

My name is Stephen Chung. I am a PhD candidate at the University of Cambridge, supervised by David Krueger. My primary research interests are AI for science, reinforcement learning (RL), biologically-inspired machine learning, and AI alignment.

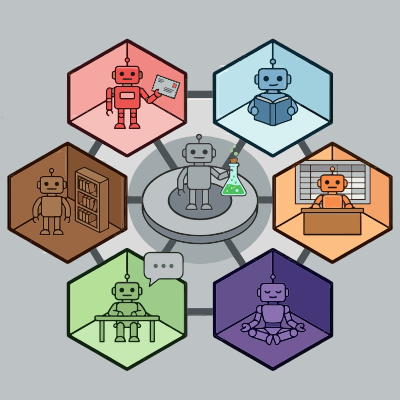

In 2025, I co-founded a start-up, DualverseAI. Our goal is to advance AI-driven scientific discovery by building an open-world multi-agent environment for AIs. I believe that scientific discovery requires the freedom to explore over the long term and to accumulate insights, rather than rigid pipelines or instructions. We developed The Station, which has achieved strong results on many benchmarks.

My PhD research focuses on building AI that can reason and plan like humans. When faced with an unfamiliar situation, we consider several possible actions and simulate the future that each would lead to (e.g., "what will happen if I hit the tennis ball from the left versus the right?"), which allows us to choose the best action. In familiar situations like driving home, however, we rely on habit without explicit deliberation. This contrast suggests that planning should be a flexible, learnable process rather than a fixed one. I proposed the Thinker method, which allows an RL agent to learn to plan flexibly.

Before Cambridge, I earned a master's degree from the University of Massachusetts Amherst in 2021. There, I was supervised by Andrew Barto and researched efficient methods for training deep neural networks without backpropagation, based on coagent networks.

Outside of research, I love reading Western and Chinese philosophy, particularly the works of Zhuangzi and Nietzsche. I also enjoy contemplating philosophical questions, playing tennis, and hiking.